Artificial Intelligence

The great complexity shift: Why AI agents don’t just simplify cybersecurity

Even as AI agents tackle cyber threats, they aren’t a security silver bullet. Explore why multi-agent systems shift complexity to new layers and what it takes to secure autonomous operations

Table of Contents

- The hidden costs of orchestrating multiple agents

- Agents as additions, not replacements to security layers

- Moving beyond black-box AI decisions

- Guardrails and policies: Keeping agents within bounds

- Adversarial testing for AI agents

- Hype vs. reality: The future of cyber workforce

- A layered approach and demanding evidence

Hack The Box just sponsored Microsoft Ignite, which introduced thrilling news, trends, and updates for autonomous security in the agentic era.

AI agents are rapidly becoming core players in enterprise security operations, with nearly half of Fortune 500 companies already deploying AI agents in production environments.

Security use cases rank among the top AI agent applications in most industry verticals. A recent report from Google, highlights how early adopters are reporting actual benefits; 67% of organizations experimenting with agentic AI have seen a positive impact on their security posture from generative AI.

The next evolution in AI-driven security might be the shift to AI autonomy from AI assistance, with agents now acting as extensions of security teams.

So agents are also evolving fast, but is the cyber workforce prepared for what’s coming?

On the surface, it sounds like a dream scenario for CISOs, SOC leads, and generally technical leaders: More autonomous workflows, faster threat response, and purely operational tasks offloaded to tireless bots.

What's not to love?

The hard truth that starts coming to the surface is that complexity can’t be removed, but just shifted to a different layer. No product plugged in with a large model will magically solve security problems.

In other words, multi-agent systems might make certain tasks look easy, but behind the curtain they introduce new layers of complexity that security leaders must manage. We are aiming to guide organizations through the strategic reality and the technical nuances of securing AI agents.

The goal is to separate the hype from reality and equip you with a clear-eyed view of what it takes to safely integrate autonomous agents into your cybersecurity stack.

The hidden costs of orchestrating multiple agents

Deploying a fleet of AI agents is not as simple as flipping a switch, and orchestrating them brings its own overhead. Here are some practices that are keeping teams working on agentic solutions awake at night, when complexity comes up again:

-

Multi-agent systems demand effort to keep agents in sync, updated, and cooperating. Each agent might have different versions or skills, which means version control and upgrades become a repeated process.

-

Complex logic for inter-agent coordination or an orchestration layer is needed (but hard to test) to manage workflows, handle failures or timeouts, and ensure consistency. A simple example: When one agent hands off tasks to another, who orchestrates that handoff?

-

Upkeep is another hidden cost. Agents need care and feeding: model retraining or fine-tuning, prompt updates, knowledge base refreshes, and performance monitoring. AI behaviors change over time.

-

Of course, the attack surface. A team of agents communicating with each other and with your systems opens new avenues for adversaries.

In short, multi-agent architectures don’t eliminate complexity. They redistribute it. Security teams must be prepared to handle complexity in a new form, and they need a safe, continuously updated environment to do it properly.

Agents as additions, not replacements to security layers

It’s critical to set the right expectation: AI agents are best viewed as an additional layer in your security architecture, not a wholesale replacement for traditional controls.

Agents can automate L1 analysis, scour logs, or even take containment actions. But that doesn’t mean you can turn off your EDR system or fire your threat hunters. In practice, the organizations seeing success treat agents as force multipliers that sit alongside existing defenses.

In companies taking AI-enhanced cyber operations strategically, specialized AI agents are being used to support key functions like malware analysis, detection engineering, and alert triage or investigation.

But even in these cases, the agents execute within predefined guardrails and play by the rules of your broader security stack and human feedback.

Moving beyond black-box AI decisions

One of the biggest red flags in how organizations treat an AI agent’s output as a black box “verdict” without capturing how it arrived at that outcome.

Traditional security tools often give binary results (malicious vs. benign, blocked vs. allowed), but behind those results are logs and data that engineers can audit.

AI agents need the same or greater level of telemetry.

In practice, this means instrumenting agents to emit rich logs, metadata, and reasoning traces as they operate.

For instance, if an agent summarizes an incident, log the raw inputs it was given (alerts, events) and the summary it produced. If an agent executes a remediation script, record what it attempted to do, which functions it called, and the results. This granular telemetry is indispensable for validating agent behavior and performing post-incident analysis.

Without detailed traces, teams would have little clue why an agent made a certain decision – a scary prospect if that decision has security consequences.

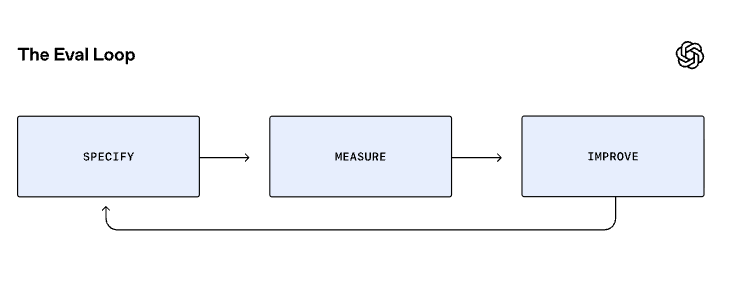

Guidance on AI evaluations reinforces this need for visibility. OpenAI recommends testing AI systems under real-world conditions and reviewing system behavior logs to catch failures and edge cases. Cyber teams should continuously measure what your agents are doing and how well they’re performing.

Robust observability also enables reproducibility and rollback. Regulators may eventually ask how an AI system arrived at a particular risk decision. With proper logging and traceability, you’ll be able to provide evidence, whereas a black box “AI magic” approach won’t cut it.

Guardrails and policies: Keeping agents within bounds

No matter how smart your AI agents are, they must operate under well-defined policies and guardrails. Human teams are meant to stay in the loop. Think of this as the “rules of engagement” for autonomous systems.

Enforceable guardrails common in many organizations include:

-

Action allow/deny lists: Define which operations agents can execute and which are off-limits unless a human approves.

-

Role-based scopes: Just as humans have roles and permissions, agents should be scoped to least privilege.

-

Human-in-the-loop: Require human confirmation or review for any agent-initiated action that could impact critical systems or data. This ensures oversight on the big decisions.

-

Rate limiting: Agents operate at machine speed. Put rate limits on how frequently an agent can perform certain actions to prevent runaway behavior or feedback loops.

By codifying these guardrails, AI agents are prevented from going beyond their intended scope. With the correct post-training and reinforcement learning, they can essentially become automated teammates following your organization’s security playbook to the letter.

Adversarial testing for AI agents

When introducing any new technology into security operations, we test it.

Adversarial testing is critical to understanding how these agents might fail under pressure from crafty attackers. Some key areas to keep under the scope:

-

Prompt injection and manipulation: This is where an attacker crafts input that causes an agent to behave in unintended ways. Red team your agents by feeding them malicious or tricky prompts and see if they can be coerced into breaking policy.

-

Data poisoning: If an agent learns from data, an attacker could attempt to poison that data. Does it have guardrails to detect anomalies in its inputs? Does it overly trust information that could be spoofed?

-

Jailbreak and misuse scenarios: Jailbroken agents can divulge information or perform actions outside their intended scope, even full AI-enabled espionage campaigns. For a security agent, this might mean seeing if you can trick it into revealing sensitive data it has access to, or to not log its actions, or to ignore a policy.

Collusion or inter-agent attacks: If you have multiple agents, test scenarios where one agent is compromised or malicious and see how it might mislead others.

Insights on Adversarial AI for Defenders & Researchers

We hosted an AI Red Teaming CTF together with HackerOne. Our findings offer a field‑based complement to red teaming benchmarks, clarifying which attack families dominate in competitive settings and where resilient defenses still frustrate skilled adversaries.

Adversarial testing should ideally be an ongoing practice, not a one-time audit. Just as attackers constantly evolve, your AI agents will face new types of threats.

Hype vs. reality: The future of cyber workforce

AI copilots are impressive tools for L0 and L1 analysts. They can digest an avalanche of alerts, suggest remediation steps, and even automate routine responses.

However, it’s crucial to temper expectations. Many organizations trying out AI agents quickly discover their telemetry and integration maturity isn’t where it needs to be. For instance, if your SOC doesn’t already centralize detailed logs, how will you feed complete information to an AI agent?

If you drop an AI agent into a weak security environment, it may become more of a liability than an asset.

It’s telling that, despite enthusiasm, organizations have not dismantled their security engineering teams or IR functions in favor of AI – but they are for sure about to radically evolve in terms of tasks and objectives.

A co-pilot that assists analysts can reduce workload and maybe handle the easy stuff, but it can also transform junior analysts into expert incident responders.

The narrative that “just add AI and you’re secure” is dangerously oversimplified. The reality is that AI agents augment well-prepared organizations; they don’t rescue unprepared ones.

As a security leader, make sure your team and budget reflect that reality – continue investing in skilled personnel, and proven defenses even as you pilot new AI tools.

A layered approach and demanding evidence

Treat AI agents like any other component in your security architecture: Secure the code and configurations, instrument heavily, enforce policies, and continuously test.

As AI agents start making more autonomous decisions, demand evidence for those decisions. In a world of autonomous systems, you should trust an agent’s recommendation only when it’s backed by telemetry and transparent reasoning that you or your team can verify.

In essence, measure before you trust. Insist on telemetry-backed evidence before acting on an agent’s verdict or allowing it to act on your behalf.

The age of AI agents in cybersecurity is undoubtedly exciting and holds great potential. Embracing it is likely to yield significant efficiency gains, but only for those who pair that embrace with strong discipline. AI agents as powerful allies rather than unpredictable wild cards in your cyber arsenal.

We are working to provide the perfect life, constantly updated environment to make the new era of cyber operations possible for humans teams and AI agents alike.