Artificial Intelligence

AI Red Teaming explained: Adversarial simulation, testing, and capabilities

With AI becoming mission-critical, it’s time to test your models like attackers would. Here is what CISOs and AI teams need to know to stay secure.

b3rt0ll0,

Jun 20

2025

Table of Contents

Artificial intelligence systems are becoming integral to business operations in every organization, from smaller tech companies to Fortune100 giants.

With that in mind, the crucial question is: how do we find and fix AI vulnerabilities before bad actors exploit them?

AI red teaming operations are attacking your own AI models and systems to identify weaknesses and improve their defenses. It’s a proactive approach to AI security that is rapidly gaining traction among forward-thinking organizations as well as more traditional ones.

Build resilience against AI-backed attacks

In this blog post, we’ll break down what AI red teaming really means and distinguish its three key aspects: adversarial simulation, adversarial testing, and capabilities testing. We’ll also see how these concepts come to life in the HTB content library, and connect the dots to real business stakes.

What is AI red teaming?

“Red teaming” traditionally means simulating real attackers to test an organization’s security. When it comes to AI, red teaming takes on some specific angles. Broadly, AI red teaming can be thought of in three categories, each addressing a different aspect of attacking AI systems.

Adversarial simulation

This is an end-to-end attack simulation using AI as part of the target. The red teamer creates a scenario mimicking a real threat actor, considering who the attacker is, what their goals are, and what capabilities they have.

Then they execute a multi-step attack against an AI-driven system from start to finish, as that adversary would.

For example, an adversarial simulation might involve emulating a cybercriminal trying to trick a bank’s fraud-detection AI while also phishing an employee. It’s holistic and scenario-based, giving defenders a realistic view of how a full AI-enabled attack would play out.

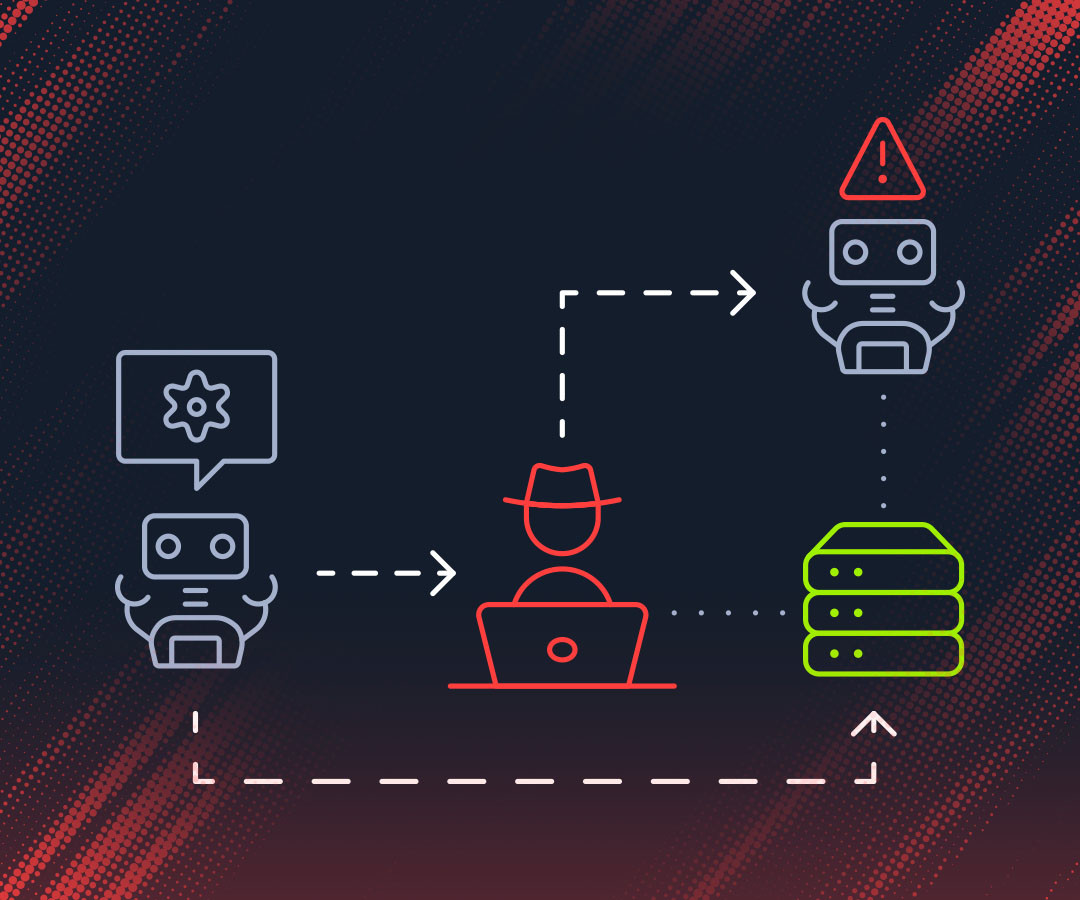

Adversarial testing

This is a targeted individual attack testing on AI models or components. Instead of a full scenario, adversarial testing zooms in on specific AI safety or policy violations.

The goal is usually to break the AI in a controlled way.

For instance, a tester might try a series of jailbreak prompts on a chatbot to make it produce toxic or disallowed content, or attempt to steal private data from a language model’s memory.

Each test is narrow (often targeting one vulnerability category like bias, privacy, or harmful output), but it’s done methodically to ensure the AI’s guardrails hold up. Adversarial testing is common when evaluating large language models’ content filters.

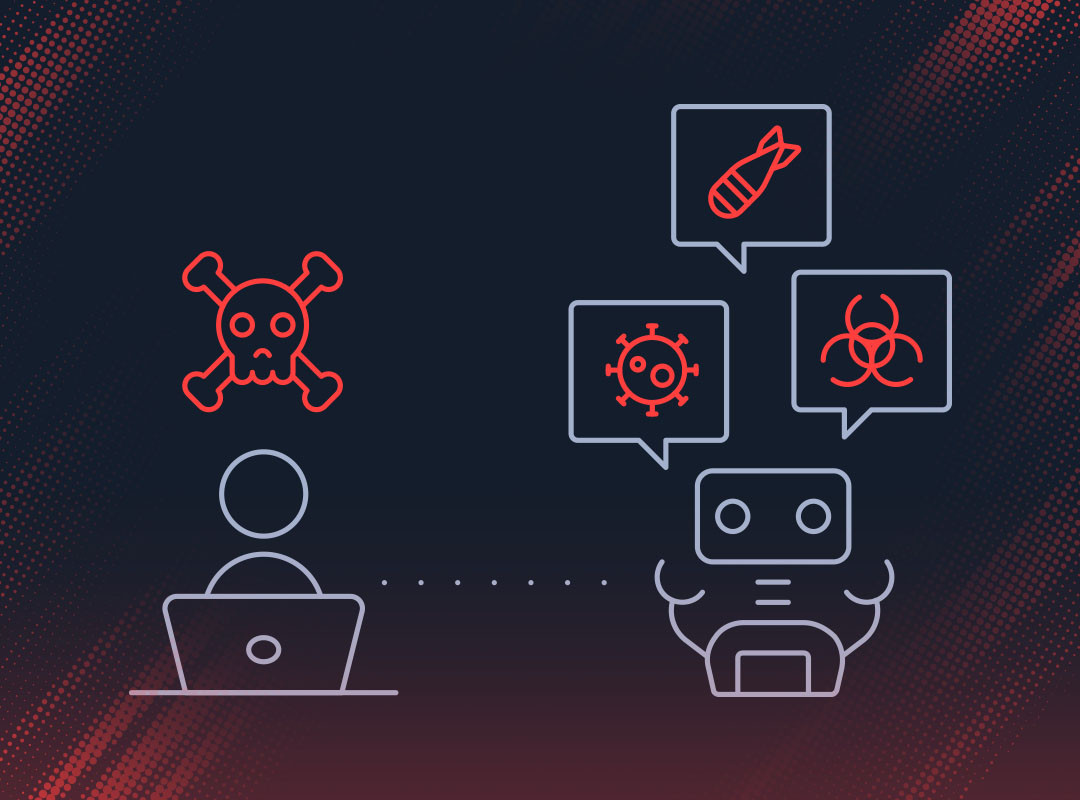

Capabilities testing

The goal is to uncover dangerous or unintended capabilities of an AI system. Sometimes AI can do things far beyond their intended purpose and we need to know if those hidden talents are risky.

In capabilities testing, red teamers push the AI to see if it can perform harmful tasks in areas like offensive security, fraud, or even biochemical instructions.

-

Can a generative model devise a new malware variant?

-

Could it give tips on building a weapon?

-

Can it convincingly persuade someone to do something harmful, or even self-replicate by generating its own code?

These are examples of dangerous capabilities one might test for. It does sound a bit like a sci-fi safety check—you’re exploring the outer limits of what the AI could do in the wrong hands. Any alarming ability uncovered here is a clue to put stricter control measures in place.

In summary, a comprehensive AI red team exercise may involve elements of all three: simulating a real attacker scenario, conducting targeted attacks on the AI’s defenses, and evaluating any dark capabilities lurking inside the model.

HTB’s recently introduced AI Red Teamer path in collaboration with Google is designed to teach security professionals how to approach all these operations.

The AI Red Teamer job-role path

Available on HTB Academy and HTB Enterprise Platform, this innovative curriculum is a structured and hands-on learning experience focused on a broad range of AI security knowledge domains. The program provides practical knowledge to:

-

Identify and mitigate adversarial AI threats, including data poisoning, model evasion, and jailbreaks.

-

Apply red teaming methodologies to evaluate AI security.

-

Understand best practices for AI security, aligned with Google’s Secure AI Framework (SAIF).

-

Engage in real-world attack simulations and hands-on labs.

The courses are designed to bridge the skill gap in AI security, ensuring that organizations have and can source trained professionals who can safeguard pivotal assets from AI-focused threats.

Once completed the path, cyber professionals will possess a deep understanding of core processes in AI red teaming: they get to practice the quick surgical strikes on AI (like prompt injections), the full-blown simulations (attacking an AI system as part of a larger network scenario), and the boundary explorations of AI abilities (uncovering what a model might do if prompted maliciously).

For any security professional or researcher looking to upskill in AI security, this kind of hands-on, wide-ranging training is invaluable—especially as AI technology rapidly evolves.

Why does AI red teaming matter for business?

“This is all cool hacker stuff, but does my business really need AI red teaming?”

Every organization embedding AI into its products or operations should be considering how to secure those AI systems, and red teaming is one of the best ways to do that proactively.

The latest headlines feature the leadership of JPMorgan Chase, one of the world’s largest banks, warning how the modern SaaS delivery model is quietly enabling cyber attackers.

“Fierce competition among software providers has driven prioritization of rapid feature development over robust security. This often results in rushed product releases without comprehensive security built in or enabled by default, creating repeated opportunities for attackers to exploit weaknesses. The pursuit of market share at the expense of security exposes entire customer ecosystems to significant risk and will result in an unsustainable situation for the economic system.” (Source)

When a bank or any major corporation deploys AI models widely (for lending decisions, fraud detection, customer chatbots, trading, etc.), it’s not just deploying software; it’s deploying something adaptive that may behave in unexpected ways.

The risk landscape expands.

AI red teaming directly addresses these concerns. By stress-testing AI models through adversarial simulations and attacks, an organization can identify vulnerabilities and failure modes before they result in financial or reputational damage.

For executives in any industry, if your company is leveraging AI at scale, you need to expand your security validation to include AI systems. The boardroom is starting to ask “how do we know our AI is safe?”

Three key suggestions for security leaders:

-

Institutionalize AI red teaming as part of your AI risk and control frameworks, ensuring your models are tested regularly under adversarial conditions.

-

Foster collaboration between AI agents and security teams to evaluate models not just for performance, but for resilience against misuse or manipulation.

-

Invest in workforce readiness to gain hands-on AI red teaming expertise, before regulators or adversaries force the issue.

Choose HTB to boost your cyber performance

You can access an extended content repository to emulate AI-based threats and benchmark your workforce against emerging threats. With more than 60% of cyber professionals fearing the use of AI to craft sophisticated attacks, there’s no more time like the present to get things moving.

Hack The Box AI training covers 80% of MITRE Atlas TTPs and is directly mapped to the Google Secure AI Framework (SAIF), making the most innovative curriculum available in the market. Get started today!