Beyond demos: How can enterprises evaluate AI SOC capabilities?

AI SOC agents are transforming security operations. Learn how to evaluate their real impact, and how Hack The Box AI Range helps benchmark their performance.

b3rt0ll0, Jan 29, 2026

Many types of intelligent assistants that automate and augment security operations have burst onto the scene, accompanied by claims about their capabilities and use cases.

Yet, according to Gartner’s 2025 Hype Cycle for AI in Cybersecurity, AI SOC agents sit right at the Peak of Inflated Expectations, rated “Embryonic” in maturity with only ~1–5% adoption so far.

Enthusiasm is high, but real-world proof and validation are scarce. Analyst research notes that while these tools claim to streamline many SOC tasks and augment staff, they remain largely unproven in practice.

Security vendors are racing to offer autonomous or agentic AI security solutions as a magic bullet for defense, a new kind of gold rush. However, even with plenty of solid vendors in the market, there’s considerable buzz and AI washing around full autonomy.

At Hack The Box, we want to help security leaders approach AI SOC agents with evidence of value rather than assumptions. Enterprises need to validate their capabilities and impact objectively before betting big on them.

The current threat landscape is dictating AI in the SOC

AI has introduced an additional layer of volatility and complexity, enabling new attack techniques like deepfakes, automated phishing, and prompt injection exploits. Attackers are already weaponizing AI to scale their campaigns, sending thousands of tailored phishing attempts or probing targets at machine speed.

This puts enormous strain on defenders.

Even with skilled and battle-tested analysts, organizations are under constant pressure to scale threat detection, investigation, and response. A capable AI SOC agent could work 24/7, triaging low-level alerts, sifting through noise, and handling routine tasks—freeing human analysts to focus on the most critical threats.

When implemented correctly, it can speed up investigations, reduce errors, enrich context, and even help upskill staff by guiding them through complex analyses.

In theory, this gives CISOs what it takes to finally make the boardroom happy: a technology that reduces operational cost, minimizes risk, and doesn’t require massive spending on new headcount.

But these benefits only come if the capabilities are carefully validated, which brings us to the critical question: how can you evaluate and deploy AI SOC agents?

Key considerations when deploying AI SOC agents

Adopting agentic AI solutions introduces new complexities. Here are some key factors enterprises should consider:

- Clear use cases and outcomes: Identify the SOC activities that consume the most time or are prone to human error (Tier I alert triage, false positive reduction, or incident report drafting) and target those for AI augmentation. An AI SOC agent should be evaluated on outcome-driven metrics. For example: “Did it reduce our mean time to resolution by X? Cut false alerts by Y%?”

- Manage risks and set guardrails: According to industry analysts, over 50% of successful attacks on AI agents through 2029 will likely exploit access control gaps or prompt injection vulnerabilities. Mitigate these risks by defining what the AI is allowed to do (and not do) autonomously, sandbox its actions in a controlled environment, and monitor its outputs for errors or anomalies.

- Establish governance and transparency: Develop governance policies for your AI agents (requiring periodic assessments, maintaining telemetry logs of all outputs, and ensuring explainability of their actions). Your team and stakeholders should understand at a high level how the AI is making decisions.

- Keep humans in the loop: Decide how much autonomy to grant these systems. As validation builds trust in the agent’s accuracy, you can gradually allow it to handle certain tasks end-to-end. Many organizations find a sweet spot in hybrid human-AI operations, where the AI handles volume and speed, and humans handle judgement and final calls. Continually re-evaluate autonomy levels as the technology matures.

The goal is to identify solutions that deliver real value—increased detection speed, reduced workload, improved accuracy—without introducing new holes in your security program.
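The outcome questions from the first consideration above (“Did it reduce our mean time to resolution by X? Cut false alerts by Y%?”) can be turned into numbers with very little code. The following is a minimal sketch, not a prescribed method: the resolution times and alert counts are made-up illustration data, and `percent_reduction` is a hypothetical helper name.

```python
from statistics import mean


def percent_reduction(baseline: float, current: float) -> float:
    """Return the percentage drop from baseline to current (positive = improvement)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100.0


# Hypothetical incident resolution times in minutes (illustration data only).
baseline_resolution_minutes = [120, 90, 150, 60]   # before the agent pilot
pilot_resolution_minutes = [60, 45, 90, 45]        # during the agent pilot

# Hypothetical weekly counts of false alerts reaching human analysts.
baseline_false_alerts = 400
pilot_false_alerts = 300

mttr_reduction = percent_reduction(
    mean(baseline_resolution_minutes), mean(pilot_resolution_minutes)
)
false_alert_reduction = percent_reduction(baseline_false_alerts, pilot_false_alerts)

print(f"MTTR reduced by {mttr_reduction:.1f}%")
print(f"False alerts reduced by {false_alert_reduction:.1f}%")
```

Agreeing on the baseline window and the target values for X and Y before a pilot starts keeps the comparison honest.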

How to validate AI capabilities with Hack The Box

So, how can you practically evaluate an AI model’s capabilities and performance before you trust it in your live environment?

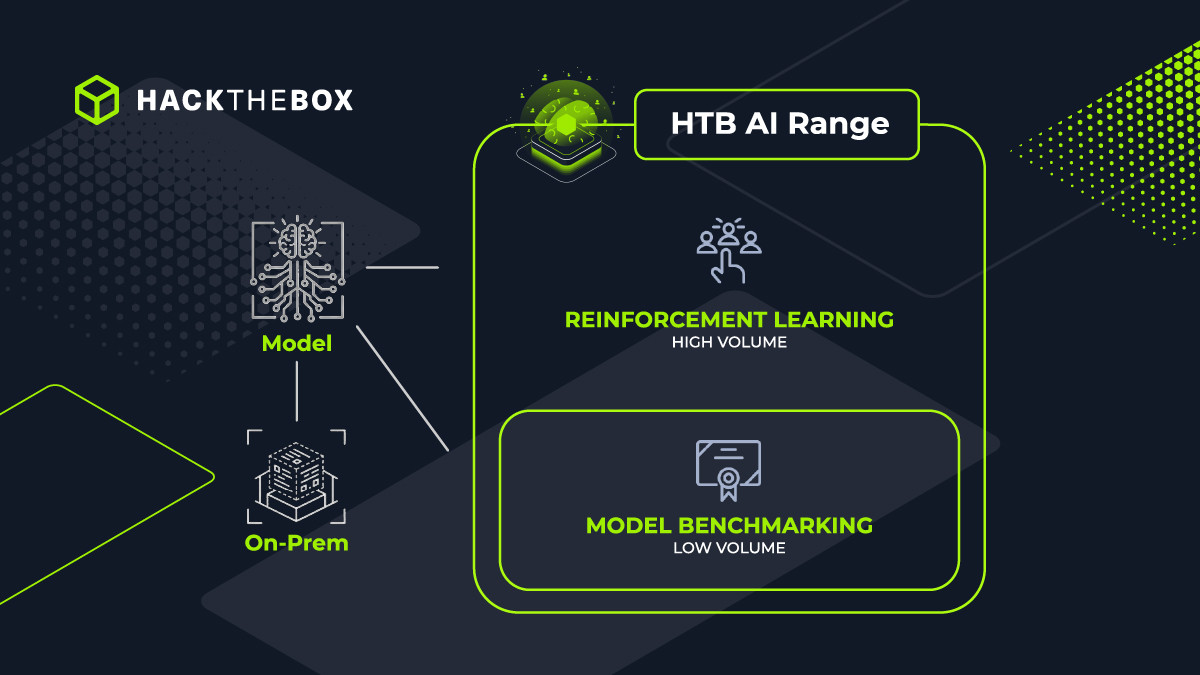

We have built HTB AI Range as the world’s first controlled AI cyber range for testing and benchmarking security AI agents in lifelike scenarios. It is a realistic cybersecurity proving ground where you can safely unleash your AI and get in-depth data on how it performs.

1. Continuous, realistic validation

HTB AI Range mirrors the complexity of enterprise networks and threats. Instead of one-off vendor demos or lab tests, you can observe your AI agent being continuously exposed to unseen scenarios. This prevents the model from simply memorizing a static test and minimizes the risk of overfitting.

2. Telemetry and measured outcomes

The platform logs every action your AI agent takes: every command, detection, alert, or error. This rich telemetry enables granular scoring of the model’s performance. The scoring methodology on HTB AI Range reflects not only success rates but also the agent’s strategy, efficiency, and adherence to security policies.

For security leaders, this means you get a quantified, evidence-backed view of an AI agent’s strengths and weaknesses: essentially a report card of its true skills.
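To make the idea of a multi-dimensional score concrete, here is a rough sketch of how success, efficiency, and policy adherence could be combined into one number per scenario. It is purely illustrative and is not HTB AI Range’s actual scoring methodology: the `ScenarioResult` fields, the `WEIGHTS` values, and the `score` function are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    solved: bool            # did the agent reach the scenario objective?
    actions_taken: int      # number of logged actions in the session
    action_budget: int      # hypothetical "reasonable" number of actions
    policy_violations: int  # logged actions that broke a stated policy


# Hypothetical weights chosen for illustration; not the platform's real values.
WEIGHTS = {"success": 0.5, "efficiency": 0.3, "policy": 0.2}


def score(result: ScenarioResult) -> float:
    """Combine three components into a score between 0.0 and 1.0."""
    success = 1.0 if result.solved else 0.0
    # Efficiency is 1.0 at or under budget and shrinks as the agent overshoots.
    efficiency = min(1.0, result.action_budget / max(result.actions_taken, 1))
    # Any policy violation zeroes the policy component.
    policy = 1.0 if result.policy_violations == 0 else 0.0
    total = (
        WEIGHTS["success"] * success
        + WEIGHTS["efficiency"] * efficiency
        + WEIGHTS["policy"] * policy
    )
    return round(total, 3)


results = [
    ScenarioResult(solved=True, actions_taken=20, action_budget=20, policy_violations=0),
    ScenarioResult(solved=True, actions_taken=40, action_budget=20, policy_violations=1),
    ScenarioResult(solved=False, actions_taken=10, action_budget=20, policy_violations=0),
]
scores = [score(r) for r in results]
print(scores)
```

The second result shows why success rate alone can mislead: the agent solved the scenario but used twice the action budget and broke a policy along the way, so its score drops well below that of the clean run.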

3. Benchmarking and comparison

Much like the classic HTB competitive hacking leaderboards, we provide visibility on how different AI models stack up against each other. For enterprise security teams, this transparency turns claims into data.

Whether you’re evaluating third-party products or comparing your in-house AI project to industry standards, the AI Range leaderboard provides objective evidence of where each solution stands.

4. Risk mitigation and confidence building

HTB AI Range addresses qualitative concerns like risk and trust by validating an agent’s safety mechanisms (for instance, does it ever attempt an action outside its allowed permissions?). Through high-volume concurrent sessions, models continuously prove and improve their security capabilities, while AI researchers gain a fast R&D ramp for reinforcement learning.
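The permissions question can be checked mechanically once every action is logged. The sketch below assumes a simple log format and a hand-written allowlist. The action names, the `telemetry` records, and `find_violations` are hypothetical and do not reflect any real platform’s log schema.

```python
# Hypothetical allowlist: the only actions the agent may take autonomously.
ALLOWED_ACTIONS = {"read_alert", "enrich_ioc", "query_siem", "draft_report"}

# Hypothetical telemetry records for one agent session (illustration data only).
telemetry = [
    {"step": 1, "action": "read_alert"},
    {"step": 2, "action": "query_siem"},
    {"step": 3, "action": "isolate_host"},   # not on the allowlist
    {"step": 4, "action": "draft_report"},
]


def find_violations(events, allowed):
    """Return every logged event whose action is not on the allowlist."""
    return [event for event in events if event["action"] not in allowed]


violations = find_violations(telemetry, ALLOWED_ACTIONS)
for event in violations:
    print(f"step {event['step']}: '{event['action']}' is outside the allowed set")
```

An allowlist is used here rather than a blocklist so that any action nobody anticipated is flagged by default.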

In summary, HTB AI Range provides a practical gateway to advance agentic AI in cybersecurity and to answer the crucial question: “Can this AI SOC agent actually deliver in the real world?”

If you are exploring how to adopt AI tools based on evidence and augment your cyber defense capabilities, evaluation and ongoing validation can’t be left aside. Book an HTB AI Range demo with your team to get started.